Explaining artificial intelligence predictions of disease progression with semantic similarity

- Conference/Journal: CLEF

- Year: 2022

- Full paper URL: https://ceur-ws.org/Vol-3180/paper-92.pdf

Abstract

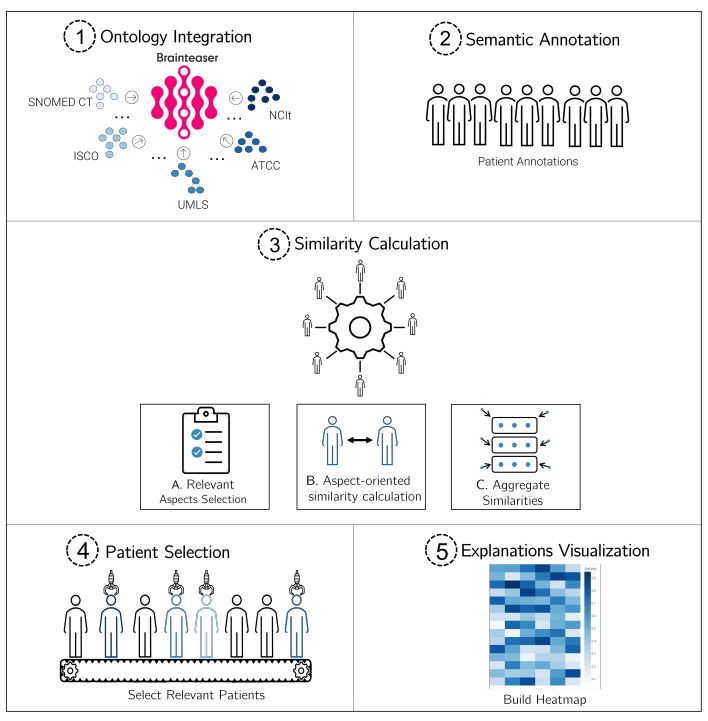

The complexity of neurodegenerative diseases has motivated the development of artificial intelligence approaches to predict the risk of impairment and disease progression. However, despite the success of these approaches, their mostly black-box nature hinders their adoption in disease management. Explainable artificial intelligence promises to bridge this gap by producing explanations of models and their predictions that promote user understanding and trust. In the biomedical domain, given its complexity, explainable artificial intelligence approaches stand to benefit greatly from linking models to representations of domain knowledge, namely ontologies. Ontologies afford more explainable features for two reasons. First, they are semantically enriched and contextualized, and can therefore be better understood by end users. Second, they model existing knowledge, and thus support inquiry into how a given artificial intelligence model outcome fits with existing scientific knowledge. We propose an explainability approach that leverages the rich panorama of biomedical ontologies to build semantic similarity-based explanations that contextualize patient data and artificial intelligence predictions. These explanations mirror a fundamental human explanatory mechanism, similarity, while tackling the challenges of data complexity, heterogeneity and size.
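The abstract does not name a specific similarity measure. The following is a minimal sketch under stated assumptions. It uses SimGIC, an information-content-weighted Jaccard index over ancestor-closed annotation sets. SimGIC is one common ontology-based measure and not necessarily the one the authors use. Information content (IC) is estimated from annotation frequencies in the patient corpus. Patients annotated with ontology terms are compared, and the most similar patients are returned together with the informative terms they share, as a similarity-based explanation. The toy ontology, its term names, the patients, and the helper names (`extend`, `sim_gic`, `explain`) are all hypothetical.

```python
import math
from typing import Dict, List, Set, Tuple

# Hypothetical toy ontology: each term maps to its direct is-a parents.
# Term names are illustrative only and are not taken from a real ontology.
PARENTS: Dict[str, List[str]] = {
    "phenotype": [],
    "cognitive_decline": ["phenotype"],
    "memory_impairment": ["cognitive_decline"],
    "executive_dysfunction": ["cognitive_decline"],
    "motor_symptom": ["phenotype"],
    "respiratory_weakness": ["motor_symptom"],
    "limb_weakness": ["motor_symptom"],
}

# Hypothetical patients, each annotated with a set of ontology terms.
PATIENTS: Dict[str, Set[str]] = {
    "P1": {"memory_impairment", "limb_weakness"},
    "P2": {"memory_impairment", "executive_dysfunction"},
    "P3": {"respiratory_weakness"},
    "P4": {"limb_weakness", "respiratory_weakness"},
}


def ancestors(term: str) -> Set[str]:
    """Return the term together with all of its ancestors in the DAG."""
    result = {term}
    stack = [term]
    while stack:
        for parent in PARENTS[stack.pop()]:
            if parent not in result:
                result.add(parent)
                stack.append(parent)
    return result


def extend(annotations: Set[str]) -> Set[str]:
    """Close an annotation set under the is-a relation."""
    closed: Set[str] = set()
    for term in annotations:
        closed |= ancestors(term)
    return closed


def information_content(corpus: Dict[str, Set[str]]) -> Dict[str, float]:
    """Corpus-based IC: -log p(term), p estimated from ancestor-closed annotations."""
    n = len(corpus)
    counts = {term: 0 for term in PARENTS}
    for annotations in corpus.values():
        for term in extend(annotations):
            counts[term] += 1
    return {t: -math.log(c / n) for t, c in counts.items() if c > 0}


def sim_gic(a: Set[str], b: Set[str], ic: Dict[str, float]) -> float:
    """SimGIC: sum of IC over shared terms divided by sum of IC over all terms."""
    ea, eb = extend(a), extend(b)
    union = sum(ic.get(t, 0.0) for t in ea | eb)
    if union == 0:
        return 0.0
    return sum(ic.get(t, 0.0) for t in ea & eb) / union


def explain(query: str, corpus: Dict[str, Set[str]], ic: Dict[str, float],
            k: int = 2) -> List[Tuple[str, float, List[str]]]:
    """Rank the other patients by similarity to `query`.

    For each of the top-k patients, also return the shared informative
    terms (IC > 0), most informative first, as the explanation content.
    """
    ranked = []
    for other in corpus:
        if other == query:
            continue
        score = sim_gic(corpus[query], corpus[other], ic)
        shared = extend(corpus[query]) & extend(corpus[other])
        informative = sorted((t for t in shared if ic.get(t, 0.0) > 0),
                             key=lambda t: (-ic[t], t))
        ranked.append((other, score, informative))
    ranked.sort(key=lambda r: r[1], reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    ic = information_content(PATIENTS)
    for other, score, shared in explain("P1", PATIENTS, ic):
        print(other, round(score, 3), shared)
```

In this sketch, the root term occurs in every patient and therefore has an IC of zero, so it never counts as evidence. Rare, specific terms dominate the score. Real ontologies are typically much larger DAGs, and IC can also be derived from the ontology structure rather than from the corpus.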